TL;DR: Investigating the use of VECTORs in Oracle 23ai, I stumble across all kinds of (python) models. Some of those pre-trained models think they can recognise my photos. Some Surprises. But I am still nowhere near my end goal: text-searching my pictures. But having the data in Oracle 23ai helps: I can use SQL.

Background

I'm on a quest to search through my own photos using a text query, like "photos with pylons in them?". So far I have managed to store images and VECTORs generated from those images. Searching image-to-image works OK: give it an example image like pylon.jpg, and it finds similar, resembling images. But it is hard to find a model (a vectoriser?) that allows text search.

In the process, I'm finding other interesting things about "Artificial Intelligence" and the use of python and Oracle. For example, there is "AI" that can generate captions, descriptive text, for the images you give it. So, Let's Try That.

But let me insert some caution: automated captions, labels, are not always correct. Some of them are funny. Hence...

Yes, the warning is there for a reason.

Caption-generation: halfway to searching the images?

If an "AI" can generate descriptions of my pictures, that would be a step in the right direction. And I came across several models that claim they can. A lot of my searches led to the Hugging Face site (link), where you can find descriptions of the models and example python code, and from where the necessary "data" of the pre-learned model can be (automatically!) downloaded.

Not all models can be made to work as easily as the blogs/PR-posts or example code suggest, but I got a few to work fairly quickly.

Disclaimer: A lot of this is "black box" to me. And when someone asks me for details, I might reply: "I have No Idea, but it seems to work".

Found a model that "worked" for me

The one that seemed to work calls itself "Vit-GPT2-coco-en" (link)

ViT-GPT-2 : Vision Transformer - Generative Pretrained Transformer 2

(Yes, I found out later that there are also GPT-1, GPT-3 and GPT-4. In my search, I just happened to stumble upon an implementation of ViT-GPT-2 that worked more or less effortlessly in my situation. I might explore higher versions later, as they look Promising.)

I took the example code from this page (link), did some copy-paste, and managed to generate captions for my stored photos. To use this model, I needed the following at the start of my py-file:
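A minimal sketch of what such a setup could look like, assuming the Hugging Face model id `ydshieh/vit-gpt2-coco-en` (my guess at the model behind the link) and a recent `transformers` release, in which `ViTImageProcessor` replaces the older, deprecated `ViTFeatureExtractor` used in many example snippets. The helper name `load_captioner` is hypothetical, and the imports sit inside it only so the file can be loaded without `transformers` installed; in a plain script they would go at the top of the file.

```python
# Assumption: the Hugging Face id of the "Vit-GPT2-coco-en" model mentioned above.
MODEL_LOC = "ydshieh/vit-gpt2-coco-en"


def load_captioner(loc=MODEL_LOC):
    """Return (feature_extractor, tokenizer, model) for a ViT-GPT2 captioning model.

    The first call downloads the pre-trained files into the local Hugging Face
    cache; later calls reuse the cached copies.
    """
    # Deferred import: keeps this module importable without transformers installed.
    from transformers import AutoTokenizer, ViTImageProcessor, VisionEncoderDecoderModel

    feature_extractor = ViTImageProcessor.from_pretrained(loc)  # image -> normalised pixel tensor
    tokenizer = AutoTokenizer.from_pretrained(loc)              # token ids <-> text
    model = VisionEncoderDecoderModel.from_pretrained(loc)      # ViT encoder + GPT-2 decoder
    model.eval()                                                # inference mode (dropout off)
    return feature_extractor, tokenizer, model
```

Usage, once at start-up: `feature_extractor, tokenizer, model = load_captioner()`.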

This tells python to use the transformers library (a way to run a model, I think), and it sets up three objects that behave like functions (function-pointers, if you like): feature-extractor, tokenizer and model. Those will be used to "extract" the caption.

With careful copy/paste from the example code, I constructed a function to generate the caption for an image. The function takes the file path of the image and returns the caption as a string:
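A sketch of such a function, assuming `feature_extractor`, `tokenizer` and `model` have been loaded as described above. The name `generate_caption` and the generation settings (`max_length=16`, `num_beams=4`, values often seen in ViT-GPT2 model-card examples) are assumptions, not taken from this blog. The image is converted to RGB first because the ViT encoder expects three colour channels, and grayscale or RGBA files would otherwise trip it up.

```python
def generate_caption(image_path, feature_extractor, tokenizer, model,
                     max_length=16, num_beams=4):
    """Return a one-line caption (str) for the image file at image_path."""
    # Deferred import: Pillow is only needed when a caption is actually generated.
    from PIL import Image

    with Image.open(image_path) as img:
        # Convert to 3-channel RGB, then let the feature extractor resize/normalise it.
        pixel_values = feature_extractor(images=img.convert("RGB"),
                                         return_tensors="pt").pixel_values

    # Beam search over the GPT-2 decoder; generate() already runs without gradient tracking.
    output_ids = model.generate(pixel_values, max_length=max_length, num_beams=num_beams)

    # Turn the token ids of the best sequence back into text, dropping special tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True).strip()
```

Usage: `print(generate_caption("pylon.jpg", feature_extractor, tokenizer, model))`.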

Notice how it calls the three functions defined (imported) earlier. The actual "magic" is packed inside these three functions, and inside a set of data, which is the "pre-learned" part of the model. On the first call, you will notice that the transformer functions download a sizeable amount of data. Make sure you have a good, fast internet connection, as the download can be considerable, sometimes several GBs.

For more information on the transformers, you can study the Hugging Face site. For the moment, I just accept they work.

Once I got it to work, I added a table to my datamodel to hold the captions, in a way that would let me run multiple models and store all their captions in the same table, keyed on the model_name.
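A minimal sketch of such a caption table. The table name `img_caption` and its columns are hypothetical; the composite primary key on (`img_id`, `model_name`) is what lets several models caption the same photo. The DDL is plain enough that the demo runs it against Python's built-in `sqlite3` as a local stand-in (SQLite accepts the Oracle type names); against Oracle 23ai the same statement would go through a python-oracledb connection, not shown here.

```python
import sqlite3

# Hypothetical table and column names. In a real datamodel img_id would also
# reference the photo table (foreign key omitted: that table's name is not known here).
CAPTION_DDL = """
create table img_caption (
  img_id      number         not null,
  model_name  varchar2(128)  not null,
  caption     varchar2(512),
  constraint img_caption_pk primary key (img_id, model_name)
)"""


def create_caption_table(conn):
    """Create the caption table through any DB-API connection (oracledb, sqlite3, ...)."""
    cur = conn.cursor()
    cur.execute(CAPTION_DDL)
    cur.close()


if __name__ == "__main__":
    # sqlite3 is only a stand-in for Oracle in this demo.
    conn = sqlite3.connect(":memory:")
    create_caption_table(conn)
    conn.execute("insert into img_caption values (1, 'model-a', 'dummy caption')")
    conn.execute("insert into img_caption values (1, 'model-b', 'another dummy caption')")
    print(conn.execute("select count(*) from img_caption").fetchone()[0])  # 2
```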

(Yes, yes, I know, the model-column should relate to a lookup-table to prevent storing the name a thousand times.. Later!).

Then I just let the model loop over all my 19500 photos, and waited... Almost 10 hours, on my sandbox machine, a vintage MacBook Pro from 2013.
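A sketch of such a loop, under stated assumptions: `photos` is an iterable of (img_id, file_path) pairs (how they are read from the photo table is not shown), `caption_fn` is any function that maps a path to a caption string, for example a caption function with the loaded model objects bound in, and captions go into a hypothetical `img_caption` table with columns img_id, model_name and caption. It commits in batches and skips images that already have a caption for that model, so an interrupted run of many hours can be restarted without redoing work. Named binds such as `:img_id` are understood by both python-oracledb and `sqlite3`, which the demo uses as a stand-in.

```python
import sqlite3

# Named binds work with both python-oracledb and sqlite3.
INSERT_SQL = """
insert into img_caption (img_id, model_name, caption)
values (:img_id, :model_name, :caption)"""


def caption_all(conn, photos, caption_fn, model_name, batch_size=100):
    """Caption every (img_id, path) in photos not yet captioned by model_name.

    Commits every batch_size rows so a long run can be interrupted and resumed.
    Returns the number of captions inserted.
    """
    cur = conn.cursor()
    cur.execute("select img_id from img_caption where model_name = :model_name",
                {"model_name": model_name})
    done = {row[0] for row in cur.fetchall()}

    batch, inserted = [], 0
    for img_id, path in photos:
        if img_id in done:
            continue  # already captioned by this model in an earlier run
        batch.append({"img_id": img_id, "model_name": model_name,
                      "caption": caption_fn(path)})
        if len(batch) >= batch_size:
            cur.executemany(INSERT_SQL, batch)
            conn.commit()
            inserted += len(batch)
            batch = []
    if batch:  # flush the last, partial batch
        cur.executemany(INSERT_SQL, batch)
        conn.commit()
        inserted += len(batch)
    cur.close()
    return inserted


if __name__ == "__main__":
    # In-memory sqlite3 stand-in and a dummy captioner, just to show the flow.
    conn = sqlite3.connect(":memory:")
    conn.execute("create table img_caption (img_id integer, model_name text, caption text)")
    photos = [(1, "a.jpg"), (2, "b.jpg"), (3, "c.jpg")]
    print(caption_all(conn, photos, lambda p: "caption of " + p, "demo", batch_size=2))  # 3
    print(caption_all(conn, photos, lambda p: "caption of " + p, "demo"))  # 0
```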

Note: I'm deliberately Not using any hosting provider, SaaS or API service, out of principle. I try to keep my source data, my pictures, on my own platform, inside my own "bricks and mortar". I fully appreciate that a hosting provider, a cloud service, or even an API could do this better and faster. But I'm using my own "Infrastructure" at the moment. I even went so far as to run the caption generator for a while without any internet connection, just to make sure it was "local".
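Pulling the network cable works. A software-only alternative, assuming reasonably recent `huggingface_hub`/`transformers` releases, is to switch the libraries to offline mode through environment variables, so they read only the local cache and fail instead of downloading. Passing `local_files_only=True` to an individual `from_pretrained()` call has a similar effect for that one load. The helper name `force_hf_offline` is hypothetical.

```python
import os


def force_hf_offline():
    """Ask the Hugging Face libraries to use only locally cached files (no HTTP calls)."""
    # These variables are read when the libraries are imported, so call this
    # before importing transformers or huggingface_hub in the same process.
    os.environ["HF_HUB_OFFLINE"] = "1"        # huggingface_hub: no downloads
    os.environ["TRANSFORMERS_OFFLINE"] = "1"  # transformers' own switch (older releases)


if __name__ == "__main__":
    force_hf_offline()
    print(os.environ["HF_HUB_OFFLINE"])  # 1
```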

The Result: Captions.

The resulting text-captions turned out to be primitive but often accurate descriptions of my photos. Here are some Queries on them:
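A sketch of this kind of frequency query, wrapped in Python so it can run through python-oracledb or, as in the demo, through `sqlite3` as a stand-in. Table and column names (`img_caption`, `caption`, `model_name`) are hypothetical. The optional `exclude` argument adds the `not like '%motorcycle%'` filter discussed next; lower-casing both sides, so that "Motorcycle" is caught as well, is my own assumption.

```python
import sqlite3


def caption_counts(conn, model_name, exclude=None, limit=10):
    """Return the `limit` most frequent (caption, count) pairs for one model.

    If `exclude` is given, captions containing that word (case-insensitive)
    are left out, e.g. exclude="motorcycle".
    """
    sql = ("select caption, count(*) as cnt from img_caption"
           " where model_name = :model_name")
    binds = {"model_name": model_name}
    if exclude:
        sql += " and lower(caption) not like :pattern"
        binds["pattern"] = "%" + exclude.lower() + "%"
    sql += " group by caption order by cnt desc, caption"

    cur = conn.cursor()
    cur.execute(sql, binds)
    rows = cur.fetchmany(limit)
    cur.close()
    return rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for the Oracle connection
    conn.execute("create table img_caption (img_id integer, model_name text, caption text)")
    conn.executemany("insert into img_caption values (?, ?, ?)",  # dummy rows
                     [(1, "demo", "a red motorcycle"), (2, "demo", "a red motorcycle"),
                      (3, "demo", "a kite")])
    print(caption_counts(conn, "demo"))                        # [('a red motorcycle', 2), ('a kite', 1)]
    print(caption_counts(conn, "demo", exclude="motorcycle"))  # [('a kite', 1)]
```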

It is clear that most of my pictures contain a "motorcycle" in various locations. No mystery there. Let's clean that list by adding

Where not like '%motorcycle%'

Now the highest count is of some "metal object with hole"? Some folks may already guess what that is, as I ride a certain type of motorcycle...

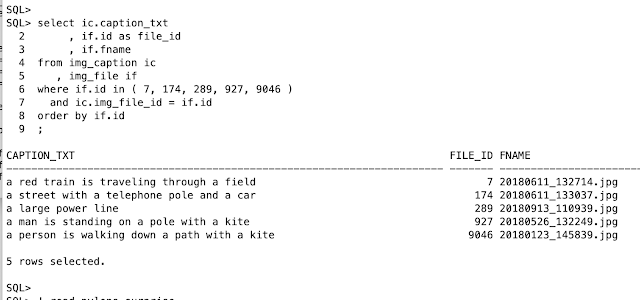

But in more detail, let me go back to a previous blog (link): I had identified 5 pictures with "powerline pylons" on them, and the vector search had correctly identified them as "similar". What would the caption algorithm think of them? Let's query for the captions of those 5 pictures:
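A sketch of such a lookup for a given list of image ids. The ids of the five pylon pictures are not listed in this text, so the demo uses placeholder ids against an in-memory `sqlite3` stand-in; table and column names are hypothetical. DB-API drivers cannot bind a Python list to a single placeholder, so the function generates one named bind per id rather than pasting values into the SQL string.

```python
import sqlite3


def captions_for_images(conn, img_ids, model_name):
    """Return [(img_id, caption), ...] for the given image ids, ordered by id."""
    if not img_ids:
        return []  # "in ()" is not valid SQL
    # One named bind per id (:id0, :id1, ...): a list cannot be bound to one placeholder.
    binds = {f"id{i}": img_id for i, img_id in enumerate(img_ids)}
    in_list = ", ".join(":" + name for name in binds)
    binds["model_name"] = model_name
    sql = ("select img_id, caption from img_caption"
           f" where model_name = :model_name and img_id in ({in_list})"
           " order by img_id")
    cur = conn.cursor()
    cur.execute(sql, binds)
    rows = cur.fetchall()
    cur.close()
    return rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for the Oracle connection
    conn.execute("create table img_caption (img_id integer, model_name text, caption text)")
    conn.executemany("insert into img_caption values (?, ?, ?)",  # placeholder rows
                     [(101, "demo", "caption 101"), (102, "demo", "caption 102")])
    print(captions_for_images(conn, [102, 101], "demo"))
```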

Hmm. Only one of the five is correctly identified as a power line. Check the pictures on the previous blog. The two "kite" captions can be explained by the triangular shape combined with the lines leading to it. The train... was not a train, but could be mistaken for one, sort of.

Main Finding (and I checked other pictures as well...): there are a lot of Wrong Captions in the list. Checking for "false positives" or "false negatives" would be rather time-consuming. So while some of the captions were Surprisingly Accurate, there is still a lot of noise in the results. Hence the "Warning".

Oh, and that "metal object with a hole"? It was the oil window on my engine... I tend to hold the phone down low to take a picture of it Every Morning, to keep track of oil consumption between maintenance. I mostly delete those pictures, but when I keep them, they look like this:

Correctly Identified! And no worries, the modern engine of a BMW R1250RT uses virtually No oil between maintenance. But it is a good habit to check.

There were several other Surprising "accurate finds" and a lot of "funny misses", but that is not the topic of this blog. The caption mechanism of this model is helpful, but still rather crude and error-prone.

Learnings.

Python: At this stage, I learned about python (and Oracle). Not much vector logic happening in my database yet.

Models, AI...: I learned there is A Lot More to Learn... (grinning at self...). Others are exploring the use of ONNX models, which can be loaded into the database (link). I am sticking to python to run my models, for the moment.

Hugging Face: I got quite skilled at copy-pasting example code and making it work. The HF site, and the way it enables the use of models, was quite useful to me (link again).

Detail on housekeeping, cached data: the models download a lot of data (the pre-learned knowledge, possibly a neural network?). By default this ends up in a hidden directory under your home dir: ~/.cache/huggingface. Relevant in case you want to clean up after testing 20 or so models: my cache had grown to close to 100 GB. It is only cache, so if you clean up some useful data by accident, it will be re-downloaded on the next run.
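A sketch for finding out which models take up that space before cleaning up. It assumes the standard cache layout, where `hub/` holds one `models--<owner>--<name>` directory per downloaded repo, and that the `HF_HOME` (or `XDG_CACHE_HOME`) environment variable, if set, relocates the cache. Files under `snapshots/` are symlinks to the real files in `blobs/`, so symlinks are skipped to avoid counting them twice. The function names are hypothetical; `huggingface_hub` also ships its own cache-scanning command (`huggingface-cli scan-cache`).

```python
import os
from pathlib import Path


def hf_cache_dir():
    """Cache root: $HF_HOME, else $XDG_CACHE_HOME/huggingface, else ~/.cache/huggingface."""
    if os.environ.get("HF_HOME"):
        return Path(os.environ["HF_HOME"]).expanduser()
    base = os.environ.get("XDG_CACHE_HOME") or str(Path.home() / ".cache")
    return Path(base).expanduser() / "huggingface"


def dir_size_bytes(root):
    """Total size of regular files under root; symlinks are skipped so nothing counts twice."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def per_model_sizes(cache_root):
    """(repo_dir_name, bytes) for each repo under <cache_root>/hub, largest first."""
    hub = Path(cache_root) / "hub"
    if not hub.is_dir():
        return []
    sizes = [(d.name, dir_size_bytes(d)) for d in hub.iterdir() if d.is_dir()]
    return sorted(sizes, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, size in per_model_sizes(hf_cache_dir()):
        print(f"{size / 1e9:8.2f} GB  {name}")
```

Deleting one `models--...` directory removes just that model; as noted above, it is downloaded again on next use.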

Generated Captions: Sometimes Surprisingly Accurate, sometimes a bit hmmm. The results might say something about my picture-taking habits, not sure what yet, but I may have to diversify my subjects a little. Too many pictures of motorcycles.

Converged Database: Give it any SQL you want.

Having the data in an RDBMS is still a large blessing. Inside a Converged Database (link), you have the liberty to combine data from any table to look for the information you need at any given time. And I Know how to formulate SQL to gain some insights.

SQL Rules!

Concluding...: Not there yet.

My original goal was (still is) to enter a question as text and have the system report back with the possible resulting images. It seems That is going to take a lot more googling and experimenting still.
