TL;DR: Success! I've got several PDBs plugged in a CDB, all created "just from scripts", no "template-recovery". From here on it is all is Dead-Simple. Or is it.

Spoiler: Finished. Success. But there is Always More ToDo.

And... Need I state it: Do Not Try This at Work. (but if you Do, let me know how it went. I'm sure you can improve on my attempts...).

Update: Oracle has, on 02-May-2024, announced to rename version 23c as 23ai. This series of blogs was researched and written with the early releases of v23, when the suffix was still "c" (for cloud), but I am too lazy to go and modify all the referneces to the new 23ai (artificial intelligence).

As soon as time permits, I will repeat my "testing" on the new versions, but on first impression, it all works identically on 23ai.

Background:

In the first three "episodes", I created the CDB + Seed, added the "catalog", and added the more or less mandatory components. Now it is time to add (and maybe delete) some PDBs and see what is happening.

To avoid the use of "templates-to-recover" (which, btw, is Highly Recommended, and much more efficient), I've been creating a database manually, from scratch, just using scripts. In all cases, I have tried to choose the "Minimal Effort" and. "Minimal Configuration Work". Minimal RTFM even (which is kinda risky... You Should Always RTFM, Always).

My list of parameters is kept minimal, thus using defaults wherever possible, my typing effort was minimal, and the management of files (creation, placement, naming, deletion) is totally left to Oracle (using defaults and OMF wherever possible).

As software I'm using the "Free Developer Release v23c". But since that is an early (preview) release, not all components are "in", and the option to "generate scripts" wasn't included in the DBCA from v23c yet. Hence I used scripts generated from a v21c as examples and edited them where needed.

Because I'm still working with the list of generated scripts from DBCA-v21c (not the same version! hence use at own risk) I had to edit. In part-3, I eliminated a few obsolete items (intermedia was deprecated in 12c, and "removed" in 19c, how many years ago...). Then I consolidated everything up-to-but-not-including any PDB-creation in one Simplified script, script nr3. That worked well, resulting in 0 (zero) invalid objects. Check previous blogs for the details.

Note: I Hate long command-lines and complicated scripts, and I especially Hate hardcoded things in scripts (like 10s of repetitions of a file-path with a version-number in it). We have $ORACL_HOME and $ORACLE_BASE and $ORACLE_SID, and we have them For a Reason.

On top of that, editing is Risky Business: Typos! - Apologies if I repeat that too often.

Finally, Creating the PDBs:

Most demos and examples show how to create the CDB and then add 1, single, PDB to it. I'm going to be a little more ambitions: I want 3 PDBs and by using OMF (Oracle Managed Files) and by avoiding FILE_NAME_CONVERT parameters, I don't want to worry about Anything on the file-system.

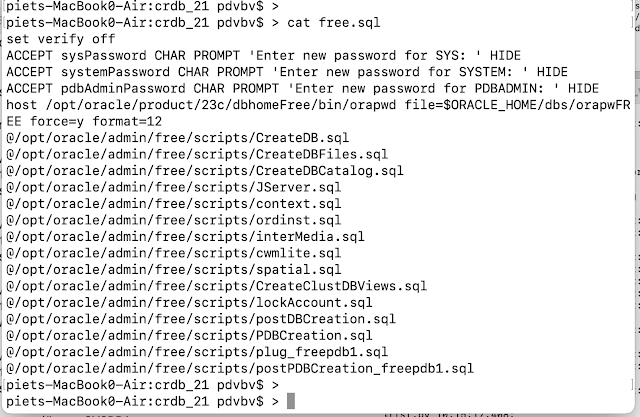

Now from the "generated" create-scripts from DBCA v21c, there are three scripts remaining. Time for another Eyeball Mark-1 Inspection, and let's see what we can do and maybe improve.

The v21c generated PDBCreation:

Strangely, this one contains little relevant items. Fine. let's move on.

Next would be the generated plug_freepdb1:

This actually creates a PDB (yesss).

With the name "hardcoded" in the script, on no less then 6 lines, including in the file-name-convert.

The spool-command seems a double (is fixable?).

The select shows data- and temp-files (can go?).

And then, Voila, we get to the Create, and Open, and Register of the first real Pluggable Database. That looks deliciously Simple, and it even announced itself to the listener straight away. That is the good part.

Hmm, yeah. I can work with that. Will still try to improve on the script though.

Last script from the generated set was postPDBCreation_freepdb1:

This one is funny. It tries to add a USERS-tablespace that already exists in the PDB$SEED (hence error!), with a hardcoded datafile-path *), hm.

That USERS-tablespace is set as default for users (Good Idea).

It then does a call to OPatch/datapatch (hardcoded 21c path and hardcoded names! what about using $ORACLE_HOME and $ORACLE_SID? ).

And it does a number of reconnects and selects, checks to report things to screen or spoolfile.

It has _only_ 11 lines with the PDB-name hardcoded in them.

OK. All Seems "Fixable" to me.

Some "good news" as well: apart from the OPatch call, these scripts are "self contained". There is no call to perl-catcon or other callout.

All I would do is to simplify a little, for Readability + Portability. Maybe, use a DEFINE or some form of arg1 (or $1 or &1) to minimise use of hardcoded names and make the scripts more portable (I'm not obsessed, not obsessed, ...)

After some more looking (on a larger screen), and some coffee, I concatenated the first two scripts and edited that into crdb4_pdb.sql:

(pre and code for crdb4_pdb)

/* -------------------------

crdb4_pdb.sql: create the first PDB(s), with minimal code + config..

notes:

- in future: define the pdb-name(s) once or pass as args.

---------------------------

*/

-- get the passwords

@accpws

SET VERIFY OFF

connect "SYS"/"&&sysPassword" as SYSDBA

set echo on

spool crdb4_pdb.log append

-- found out this "bootstrap" was needed. MOS note: 2538591.1

host $ORACLE_HOME/OPatch/datapatch --verbose

-- let's do multiple plugs straight away.

-- future: create 42 plugs in a pl/sql loop? would that work?

CREATE PLUGGABLE DATABASE FREEPDB1 ADMIN USER PDBADMIN IDENTIFIED BY "&&pdbAdminPassword" ;

CREATE PLUGGABLE DATABASE FREEPDB2 ADMIN USER PDBADMIN IDENTIFIED BY "&&pdbAdminPassword" ;

CREATE PLUGGABLE DATABASE ORCL ADMIN USER PDBADMIN IDENTIFIED BY "&&pdbAdminPassword" ;

-- only see 2 of the 3 new ones, wonder why ...

select name from v$containers where upper(name) like 'FREEPDB%';

alter pluggable database FREEPDB1 open;

alter pluggable database FREEPDB2 open;

alter pluggable database ORCL open;

show pdbs

alter system register;

prompt ---- End of crdb4_pdbs. Please check, properties, files, listener etc... -----

spool off

Here is what I hope to achieve:

There are no file-paths at all, I'm not going to mess with files if I can help it.

The remaining commands are readable (even on 80x25 terminal) and straightforward understandable, I hope.

On testing, I (re-)found that OPatch/datapatch needs a "bootstrap" (MOS note: 2538591.1, known issue...). I'm doing that before any further PDB-creations.

I then create and open the PDBs.. no big deal actually, and I register them with the listener. The select-checks and the can wait (for next script).

In my current script I am left with just 3 PDB names, which are "hardcoded" 2x each. That still makes 6 items to correct later. ;-(.

That user-tablespace, the selects and checks from the postPDBCreation, I'll isolate in a separate check-script, to be run per PDB. One day, I'm hoping for a generic, portable script to "add 1 PDB" to a CDB, in the simplest fashion, for now, I'm in a hurry: I want my PDBs, to observe and play with...

Bonus "feature": I _could_ try run these last script even from just-a-client when connected to the CDB$ROOT, but it would mean ignoring the datapatch and editing every "connect". Too Lazy at the moment. But good to know it can be done (yep, I checked, later, works fine, but ignoring datapatch, so maybe not recommended)

I'm impatient, Let's Do This:

If you are like me, you might check disk-usage on "the server" and check the listener status, before and after. But was in a hurry...

The datapatch part went down fine:

And the creation + opening:

You can see the creation of 3 PDBs, with not drama at all. One of them has the "classic" name ORCL, just because I can. I do now wonder if I can't find a way to leave out the admin-user part as well. I also left the original "select" statement from the generated script (hint: something about a name "hardcoded" maybe).

We then witness the OPEN-ing of each PDB and if you run the script for yourself, the command "show pdbs" will display them, all of them this time. In the end there is also a "register" to let the listener know what was added.

If you go back and check your filesystem and listener: Creating will have taken up about 3G of diskspace, and the listener will show the new PDBs as services.

Checking from a "client machine" to connect over the listener:

Of course, from a client machine, I can connect to any of the open PDBs. Just to show how Easy.

Almost Done...

We now have 3 PDBs and I can play with them. Nice. I got more or less what I wanted. We are Nearly There.

Just a little bit of housekeeping to do: remember that postPDBCreation.sql? It did set the default to USER-tablespace, did OPatch and did a number of selects. I am going to respect the same commands and same order of events, more or less. But I have edited that to become a script to be run per-PDB, with the PDB-name as argument (the &1 in the oracle sql-script world):

The script crdb4_postcheck.sql:

/* ---------------

crdb4_postcheck.sql pdb_name: set user-ts, do OPatch and run some selects

arg1 : PDBNAME to check, e.g. FREEPDB1 or ORCLPDB2

notes:

- adapted from postPDBCreate_freepdb1.sql, as genrated by dbca-v21c.

-----------------

*/

-- pick arg from cmdline.

define targetPDB=&1;

-- get the pwds, we need m

@accpws

-- stmnt and their sequence-order copied from generated postPDBCreate

SET VERIFY OFF

connect "SYS"/"&&sysPassword" as SYSDBA

spool crdb4_postchecks.log append

alter session set container="&&targetPDB";

prompt Adding + setting dflt tablespace USERS, If needed...

set echo on

CREATE SMALLFILE TABLESPACE USERS LOGGING

DATAFILE '/opt/oracle/oradata/FREE/freepdb1/users01.dbf'

SIZE 25M REUSE AUTOEXTEND ON NEXT 20M MAXSIZE 1G ;

ALTER DATABASE DEFAULT TABLESPACE USERS;

-- next, the call to OPatch, added OH and SID

host $ORACLE_HOME/OPatch/datapatch -skip_upgrade_check -db $ORACLE_SID -pdbs &&targetPDB

-- queries, copied straight from genereated v21c original.

-- not sure if essential

connect "SYS"/"&&sysPassword" as SYSDBA

-- expect TRUE

select property_value

from database_properties

where property_name='LOCAL_UNDO_ENABLED';

connect "SYS"/"&&sysPassword" as SYSDBA

alter session set container="&&targetPDB";

select TABLESPACE_NAME

from cdb_tablespaces a,dba_pdbs b

where a.con_id=b.con_id and UPPER(b.pdb_name)=UPPER('&&targetPDB');

connect "SYS"/"&&sysPassword" as SYSDBA

alter session set container="&&targetPDB";

set echo on

Select count(*) nr_valid_DV

from dba_registry

where comp_id = 'DV'

and status='VALID';

-- go back to root.. why?, does exit straight after..

alter session set container=CDB$ROOT;

exit

And ... running that script, 3 times, Went Fine: the USER-tablespace came from the PDB$SEED, it was already there. If it is ever missing, the error will notify us. Setting the default tablespace is no problem, and running Opatch/datapatch works Fine. The last select, checking for DV (DataVault, installable per catols.sql) isnt present here because it wasnt included in the "generated scripts" for v21c. Something I could correct, but was too Lazy...

Plus, I can now Rapidly provide dev/test systems (mostly containers) and customise them to my hearts content...

Also: (in scope, but this blog is too long already): Dropping a PDB including datafiles, gives you back the disk-space immediately, which I think is quite nice.

I've run most of my ppt-demo SQL scripts, and they work fine agains 23c! (I had not expected anything else). I can use this container in my next presentations and be one of the Uber-Hip crowd using v23c. How Cool is That?

I would say: This is Success !

So in summary, I was able to create several PDBs, with minimal configuration and minimal typing-effort. Mostly due to the OMF parameters in my spfile and using as many defaults as I can.

My main "obstacle" was actually the "typing effort" in crdb3_com.sql: installing all the various components. And the testing and removal of typos to make the whole exercise look even more Simple.

I will now sagely, as a well behaved Oracle-DBA, wait for the actual GA (General Availability) of v23c. A GA which hopefully still comes with a DBCA that can generate scripts that I can read and alter.

Warning: writing this up took me much more time then anticipated. Actually creating the system was easy. But tweaking the scripts to be 0-error, and Portable, and chasing down some of the nitty-gritty details took waay too much time... Anyway, it was interesting enough to do it. Maybe it will be useful knowledge one day.

The List of "Always More ToDo"...

I wanted a quick-create, .. reading + investigating turned out 4 waaay to long blogposts... And ... There is always more. The list got so long it would merit a separate blog+discussion.. Here are some of the items you can think about, or maybe I'll do some writeups later.

Experiment with Read-Only Oracle-Home. Ensure maximum integrity of the software-tree. Separate Software from Data was always a good concept.

Experiment with mounted volume and then store the databases, and the admin- and the admin-output (diag!) on a mounted-volume. That could make the container much smaller still. (This would be my Prio if I was deploying via Containers...?)

Completeness.... I'd really like to see the scripts generated by v23c to make sure I got all the components and activities Correct: complete and in the right order etc... Up to now, this is just a hobby-project with a lot of Guesstimation involved.

Directory-names: I dont like the overly-long generated names for PDB-subdirs. I'll have to investigate the "simplest possibly" way to use FILE_NAME_CONVERT...

The UNDO=Local setting merits some investigation. Can that save me more effort+space? What is best/easiest, and when? For the moment, I'll just point you to Tim "Oracle-base" Hall for reading up on the topic.

Housekeeping, files: My "disk system" got full a few times during these experiments. There are MAX_SIZE clauses for data, audit and diag, and those Will be Useful. Alternatively: Check your subdirectories under the "diag" tree, and the output-log-dir for catcon. Especially when providing "dev-/test-containers" to developers, you dont want your system to run into "mysterious errors" due to disk-full problems.

Housekeeping, drop PDB: Demonstrate how dropping a PDB frees up disk-space, Automatically. Lazy-me is Really Happy with this (OMF-)feature. But dropping a PDB leaves an empty subdirectory. Not a big problem, but I'd rather have that cleaned anyway. Requires another trick (script).

Script-beautifying: Evidently. Also, the original (generated) scripts do a lot of (re)connects and spools.. I can still make my scripts cleaner by removing seemingly useless "connect" and housekeeping stmnts. (I like small, clean scripts...).

LockAccount: The crdb3_com.sql now contains a copy of the generated code. On close inspection: this is identical, repeating code. Make it a call to a script.

Make a single, better, crdb4-script: a Simple script, taking the PDB-Name as input, should be able to add (or later remove..) a PDB, including the OPatch and some of the checks.

The OPatch, needed a bootstrap. Doesnt seem like a critical problem to me, but would not discard it. Easily Fixable, MOS note: 2538591.1. Best add that to crdb3_com at some point (after CDB, but before 1st PDB).

Test a patch or an upgrade: This will Inevitable need to be done sometime...

On perl: I'm still not very happy with the perl-stuff as it is yet another component, and yet another dependency. The scripts have a hardcoded path to the oracle-provided perl-executable in them. And another, different-version of perl is found in /usr/bin, which apparently the scripts don't trust or don't want you to use. It makes sense, but it is a bit cumbersome.

But in the end: Happy!

At least I got my kick of creating a Database from Scratch using scripts. I got the learnings, now documented in a set of scripts. And my scripts seem Portable enough to be able to create a v23c DB on just about any (linux)platform (VMs, containers) even without the templates.

If anyone wants a copy: copy/paste from the blog seems to work, or drop me a message for the repository.

------------ end of part 4 -------------

Disclaimer etc. ... nah.

And remember what I wrote earlier: Dont Do This At Work (or... not yet).

*) Footnote:

On the use of hardcoded-file-path-name for database-files (do I have to say this..?): How often did you end up with one or two files (crucial, needed datafiles) in a rogue location because of forgotten or badly-replaced filenames in re-used scripts ? Folks have overwritten files because of this, folks have ended up with "windows file-path-names" in the DBS-directory of a linux-systems bcse of this. (do I have to do a blog on this with bad-examples... )

Minimise hardocoded paths, please.

-------- the Real End of Part 4 ------- Maybe parts 5, 6 etc., but Later --------