TL;DR: The new DOMAIN type in Oracle23ai can be used as LoV. And in some cases that can save you Effort: if it does not have to visit the FK-tables. I'll show some examples, and I'll demonstrate a few pitfalls to avoid.

Background.

It all started when someone suggested to use DOMAINs of type ENUM as an alternative for FK-tables with List-of-Value lookup-data. For example, if you want your table to have a field "color", limited to just the colors of the rainbow. You can use a check-constraint (simple!), or a table with color-values and an FK-constraint, or in Oracle 23ai, you can use a DOMAIN. We will compare ENUM-domains with t FK-LoV tables.

Datamodel: a fact table with two LoVs

For this little demo I will use an old-fashioned datamodel with two lookups tables, and one long fact table. And the queries on that 3-table model will be compared to the same queries against a single table where the LoV are held in two DOMAINS of type ENUM.

First the traditional model looks, it like this:

(I love a primitive diagram...)

And the Fact table is defined as follows:

All very Classic, Data-table with LoVs and Foreign Keys. The FK are the "constraints" that ensure correct data. I also put indexes on the FK-fields, but you could ommit those.

Now if you want, you can replace those FKs with DOMAINS of type ENUM and use that for the column-definitions:

I stated by defining two DOMAINS, the color-one looks like:

I created a similar domain with 1400 Roman Numerals (too long to show, but there will be a complete re-play script at the bottom of the blog).

Now create the table and use those domains:

Note how two of the columns have their type defined by a DOMAIN-ENUM. There is no FK, and you can leave out the LoV tables completely. But the values for the columns are now Constrained by the domain-definition. For good measure, I created indexes on them, as I expect to be "searching" for those at some time.

We filled both tables with Identical data, and did a generate_statistics on them. Now we are ready do some some comparisons.

Aggregate Queries

Let's try to aggregate some color-records, and have a Query that _needs_ to visit the table (otherwise, the optimiser could sneakily just only use an index):

Access is via Index-full-scan and table-access full. Join is done by hash-join (more efficient than Nested Loop in this case). Looks like expected behavious. I happen to know that the LoV table t_color_lov is 8 blocks, and the data-table is 768 blocks in size. Check the statistics of the query:

Just over 700 gets. More or less as expected as there will be some empty blocks in the table.

Now try this using the table that Does Not have FKs, but is using DOMAIN. First the explain:

We see 1 Full-Scan of the table. No other tables involved, because the constraints are done by the DOMAIN, not by FKs.

The query-stats look like:

Maybe somewhat to our surprise, there is little gain here. Both queries are Equally efficient. The 7 records for color-LoV probably fit into 1 block, hence the case of the "enum" is consuming just 1 block less.

[ soap-box: ]

Now, YMMV, because I heard of ppl who got bitten by "too many LoVs, too many joins". And they claim to fix this with either Check-Constraints or simply removing the FK-constraints. But I would Strongly Suggest to examine your Explain-plans first. The optimiser is well-capable to Scan the small tables just-once and use hash- or merge-joins to avoid (nested)Loops. Domains are, IMHO, not a way to solve those bad-queries (but they could, potentially...)

[\steps off soap-box]

I also think Connor McDonald, and others have previously proven, sufficiently, that : Joins, Good Joins, Well Done Joins, ... Are Not Expensive.

And in the case of relatively Large data-tables, and retrieving "a lot of records", the elimination of Joins to small tables by using DOMAINS will only yield very limited benefit (1 block out of 700...).

Single-record retrieval queries.

Let's compare what happens when we retrieve just 1 data-records. The relative overhead of joining to LoV tables might weigh a little heavier on those "precise" cases. We are retrieving Two records and want to see color and roman (e.g. get those from the LoV or from te domain):

We notice the unique scan to retrieve the two records from data_2lov, a full-scan on the color_lov (7 records, effectively just 1 block) to pick out the 1 record needed, followed by the use of the unique-index on roman_lov to retrieve the roman-numerals, one for each record. The predicate informationd confirms this.

Then we check the statistics, they show 13 block-gets:

By many criteria, 13 block-gets is an Efficient statement.

Note for SQL-geeks: Yes, There is room for minor improvements. By using a "covering index" on the data_table. Or from the use of IOTs. Maybe, with some extra indexing, this query could be brought back another 3 block-gets (eliminate 1 step for every table) to 10 gets. I consider that out of scope for now as not a lot of systems seem to go that far in optimising. But for critical systems I would recommend use of IOTs (Index Organized Tables, docu here) for the LoVs. Should we add a demo of Extreme-Indexing... ? (just for the fun of it...?). Maybe Later (reserve space for link to script).

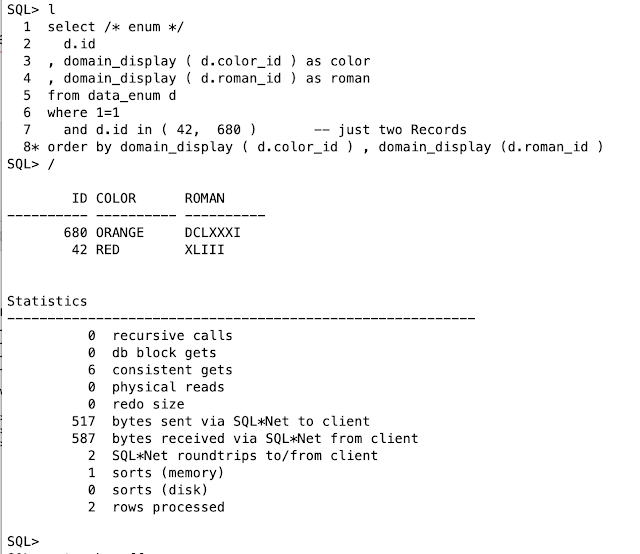

Now let's retrieve the same info from the data-table using the DOMAINS:

The SQL and the Explain-Plan show only One Table involved this Query. The color and the roman are retrieved from the domain_display ( column ) function. For good measure, we added the same order-by as in the previous query.

The Statistics now show :

Only 6 gets. Because we only need to use the PK to retrieve each record. Probably two index-blocks (index-root and 1 branch) and one table-block (by index rowid) for each of the two numbers in the in-list. Total of 6 gets.

Hence, even though the original effort per query was low (13 gets is an efficient query by most standards), the savings are Significant: We reduced the gets by half. And if you have more of those LoVs per record, those savings can add up.

Note: Possibly you get even more benefit, notably if your datamodel is missing some indexes. Or if you have stale/wrong/missing statistics whereby your optimiser is "deteriorating your query-plans". In those cases, the elimination of LoV joins will (appear to) help. But in those cases, you have other (datamodel + optimiser) problems!

But in short: the elimination of some joins, especially in complex or high-frequency SQL may Benefit from the use of DOMAINS. QED.

My interpretations (and ymmv...)

1st: For Large, Aggregate-type queries, there is No big Advantage.

On lage queries, where you scan significant parts of the "data" or fact-table, the additional, single, Full-Scan of the lookup-tables is probably negligible. Small compared to the effort of scanning the data-table. Hence if your work consists of lage, aggregate-type queries, there is little gain in avoiding LoV tables. Provided you have your indexes done well and statistics up-to-date. Also c.f. Soap-Box statement above...

2nd: Possible Gain on fetching small-sets or Single-Records.

On retrieval of small sets, or individual records, Especially if you have to retrieve many LoV-values, the additional table-access for the LoVs Can be a larger part of your Query-effort. And here you can save on the additional Hop(s) to the LoV table(s). This can be beneficial If your work consists of a lot of small, OLTP-style SQL-statements where you need to access LoVs Many and Often. Again, providing you have you indexes Done Correctly.

3rd, Note: I have some remaining notes on using domain-expressions in where-clauses as well. Maybe put those in a followup to prevent a "too-long-read".

Closing Remarks.

In summary, I think the use-case for "reducing effort on LoV" should be focussed on the cases where the LoV is a relatively large part of the total-effort. That is mostly (if done correctly) the case in retrieving small sets or high-frequency, quick-verifications.

So there Is in specific situations a Good Case for using DOMAIN-ENUMs.

-- -- -- -- -- -- -- -- -- -- -- -- -- --

demo_enum_4select.sql : the complete script, just hit enter 9 times.

demo_enum_4extreme_index.sql : remind me to add this if I get there...

No comments:

Post a Comment