TL;DR: In Oracle 23ai, the DOMAIN type is a nice addition to implement more sophisticated Constraints. Someone suggested using a DOMAIN of type ENUM as a column-constraint, to act as a List of Values (LoV). Works like a charm. But the behaviour of some where-clauses may not be what you expect. Let me show you some very simple examples, and some workarounds, both bad and Good.

A prism, just because I used colors as an example.

Background

After seeing a presentation on the use of ENUM-Domains as a List of Values on a column, I did some scripting to see how it could work (previous blogs here + here). Now I tried to use it in Where-Conditions.

So I have a few domains, one of which is color:
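A minimal sketch of what such a domain could look like. The exact list of colors and their numeric values are assumptions for illustration; only the domain name color_enum and the existence of a "red" entry come from this post.

```sql
-- Assumed color list: the values here are illustrative only.
-- Each enum name becomes a constant (color_enum.red, ...) with a numeric value.
create domain color_enum as enum (
  red    = 1,
  green  = 2,
  blue   = 3,
  yellow = 4
);
```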

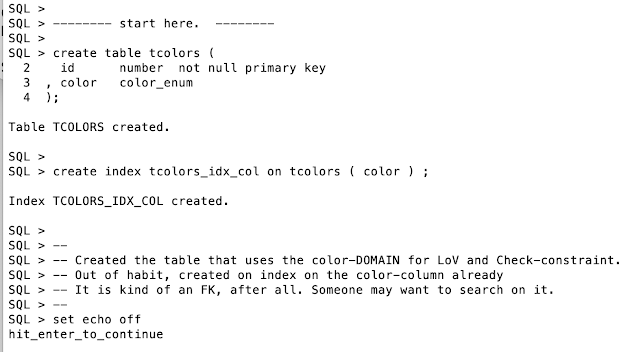

And I created a table that uses the domain in a column-definition, like this:
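A sketch of such a table, under assumptions: the table name t_color_demo and the index name are hypothetical, and only the columns id and color are taken from this post.

```sql
-- Hypothetical table name; the column "color" takes its type from the domain.
create table t_color_demo (
  id    number primary key,   -- the primary key brings its own index
  color color_enum            -- stored as a number, constrained by the domain
);

-- Treat the color column like an FK column: give it an index.
create index t_color_demo_color_ix on t_color_demo (color);
```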

The column is defined as type "color_enum" and thus inherits the LoV defined in the Domain. As I consider the color-column to be akin to a Foreign-Key, I have already created an index on it.

When I ask for the INFO of the table, using SQLcl, this is what I get:

The column COLOR apparently got defined as number(38,0), i.e. an Integer, because it cannot hold decimals. But the same column also has the DOMAIN specified as COLOR_ENUM. The info-command also shows I have indexes on both the ID and on the Color-column.

Note: I'm using SQLcl (link) here. In SQL*Plus you would need to do a DESC of the table. Latest versions of SQL*Plus will show the number + domain, but older versions will just show the column as number(38,0).
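For reference, the two commands mentioned would look roughly like this. The table name t_color_demo is a hypothetical stand-in for the demo table.

```sql
-- SQLcl only: shows columns, domain and indexes of the table.
info t_color_demo

-- SQL*Plus (and SQLcl): the classic describe.
desc t_color_demo
```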

Do some Inserts

We need some data in the table so let's...
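A sketch of such inserts, assuming a hypothetical table t_color_demo(id, color) and a color_enum domain in which red, green and blue exist with the values 1, 2 and 3 (the actual demo data may differ):

```sql
-- Using the enum names as constants...
insert into t_color_demo (id, color) values (1, color_enum.red);
insert into t_color_demo (id, color) values (2, color_enum.green);

-- ...or using the underlying numeric value directly (3 = blue in this sketch).
insert into t_color_demo (id, color) values (3, 3);

commit;
```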

Notice how I can use both the enum.name, which is effectively a set of Constants, or an actual numeric value to insert data. I did not have to visit an LoV table to get (read or join) my values.

Just curious: how does it handle "wrong values":
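The statement for this test could look like the sketch below. The table name t_color_demo is hypothetical, and it is assumed that the domain has no entry named purple. The statement is expected to fail.

```sql
-- "purple" is not one of the enum names, so this insert should raise an error.
insert into t_color_demo (id, color) values (10, color_enum.purple);
```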

There is no "purple" defined in the Domain-Enum and an error pops up. But the description of the error is a little vague in my opinion. It seems to read "color_enum.purple" as a reference to a column. I think the actual error here is more of an "invalid value" or "check constraint violated". But I can see why the error is what it is.

And what if we try to insert a number out of range:
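A sketch of that test, again with the hypothetical table t_color_demo and assuming that 99 is not one of the values defined in the domain. The statement is expected to fail.

```sql
-- 99 is outside the list of values defined in color_enum (assumption),
-- so the check constraint that comes with the domain should reject it.
insert into t_color_demo (id, color) values (11, 99);
```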

When trying to insert a number outside the valid range, the Error is more Clear. It refers to a "check constraint" and it mentions the DOMAIN. This should give even a developer enough clues to fix it.

And we have Proven that the Domain acts as both Check-Constraint and LoV. It is very much similar to an FK (as tested in previous blog as well).

Next is doing some Retrieval.

Selecting from the table.

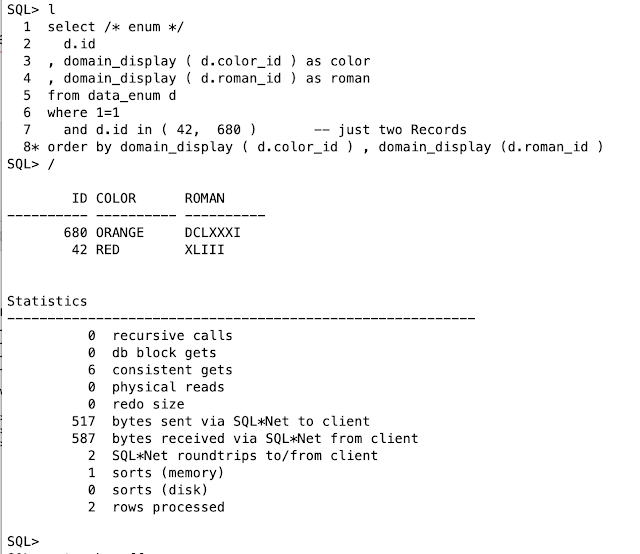

The result of our inserts is a table with the following data:

No surprises here. But notice again that the columns "id" and "color" are numbers, and we need a display function to obtain the "name" of the color.
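A query along these lines shows both the stored number and the name. The table name t_color_demo is a hypothetical stand-in; domain_display() is the function used throughout this post.

```sql
select id
     , color                            -- the stored numeric value
     , domain_display (color) as color_name  -- the enum name from the domain
from   t_color_demo
order  by id;
```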

Now suppose we want to search for records by the name of the color. We need to put the condition against the domain_display():
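A sketch of such a search, including a way to look at the plan. The table name t_color_demo is hypothetical, and explain plan with dbms_xplan is used here as one possible way to show the plan (autotrace would do as well).

```sql
explain plan for
select id
     , color
     , domain_display (color) as color_name
from   t_color_demo
where  domain_display (color) like 'RED%';  -- condition on the function, not on the column

-- Show the plan, including the filter predicates.
select * from table (dbms_xplan.display);
```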

Notice the Table Access Full. But also notice the filter: the condition on the display-function. It seems to employ a CASE-When statement to pick up the values from the DOMAIN.

I suspect that the use of the function to find the value of like 'RED%' is akin to a type-mismatch (e.g. comparing varchars to numbers), which stops us from using the index on the numeric column color. But a Full Table Access can be a bad thing on large tables.

It works much better if we use constants or plain numbers to look for our records:
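The two variants could look like this sketch. The table name t_color_demo is hypothetical, and red = 1 is an assumption about the domain definition. On a very small table the optimizer may still prefer a full scan, so the plan you see can differ.

```sql
-- Variant 1: compare the numeric column against the enum constant.
explain plan for
select id, color
from   t_color_demo
where  color = color_enum.red;

select * from table (dbms_xplan.display);

-- Variant 2: compare against the plain number (1 = red in this sketch).
explain plan for
select id, color
from   t_color_demo
where  color = 1;

select * from table (dbms_xplan.display);
```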

If the type of the comparison matches the indexed, numeric, value, the query Can use the index. And this is the desired outcome.

The Warning is Clear: Do Not Use that function in a search-criterion. Unless...

Function Based Index?

Because domain_display() is a function, we could consider adding a Function Based Index. Let's try that:
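A sketch of such an index. The table and index names are hypothetical. After creating it, the query with the like 'RED%' condition on domain_display(color) can be explained again to see whether the new index gets picked up.

```sql
-- Function-based index on the display value of the enum column.
create index t_color_demo_disp_fbi
  on t_color_demo ( domain_display (color) );
```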

That works. With the FBI, the where clause has an index that matches the domain_display, and the query uses that index. Maybe this is what you need. But I am generally hesitant to add more indexes. I would prefer to use matching types in the where-clause: constants, or other numeric conditions.

More Exotic: join the DOMAIN:

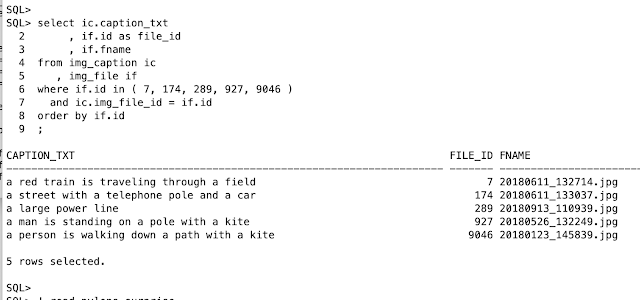

For a more exotic idea: you can use the ENUM-domain as a record-source in the from clause, and add a join to the Query. Not that strange, because this is what you would do if you were using an FK-Constraint anyway:
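A sketch of such a join. The table name t_color_demo is hypothetical. The column names enum_name and enum_value are what I would expect a select from an enum domain to expose in 23ai, but treat them as an assumption and check with a plain "select * from color_enum" first.

```sql
-- The domain itself acts as the LoV "table" in the from clause.
select t.id
     , t.color
     , d.enum_name as color_name
from   t_color_demo t
join   color_enum   d on d.enum_value = t.color   -- numeric join, matches the index
where  d.enum_name like 'RED%';
```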

And it works. Apparently I can use the domain as a record-source with no problem. Let's have a look at the explain-plan:

There is a list of "filter" operations on the domain, but the query does use the intended (numeric) Index. This is how you would do it when the constraint was a classic FK to an LoV table. And this still works as well.

Summary: DOMAIN-ENUM can be used as LoV.

The DOMAIN can act as LoV and FK. It brings both the "check constraint" and readable "constants" for use in code, without the need for a separate table to keep the values. In some cases, this will bring you a reduction of IO-Effort and make your queries more efficient (see earlier blogs). And the same limitations apply when searching for the "description of the LoV".

Advantage or not: You cannot mess with a Domain as easily as with a table. A DOMAIN cannot be edited in the same way as an LoV table (which I have been known to edit in a live system...).

Furthermore, I showed a workaround with an FBI. But I do Not Recommend putting Function-Based-Indexes on domain-columns indiscriminately. Every extra index should be given careful Consideration.

Oh, and: RTFM

There is a lot more on DOMAINS than just LoV. Domains can/should (IMHO) be used for Sophisticated Constraints on one or more (groups of) columns. Do Go check the Documentation !

Or check this page by Tim for copy-paste usable examples. After all: RTFM = Read Tim's Fantastic (summary of the) Manual (link).

Enjoy.

-- -- -- -- -- -- end of blogpost, appendix below -- -- -- -- -- --

Appendix:

Script to replay the demos.

demo_enum_5where.sql : just hit enter several times to walk through the demo.

Happy Coding (Tip: just use SQL wherever you can!)