TL;DR: Using Oracle23ai and python, I wanted an SQL-function to produce the "epoch" value similar to a python call to time.time(). And of course I wanted the fastest possible version...

Oh, and a note on "Usage": Preferred usage is in a CTE... You'll see.

The image is an atomic clock from the National Institute of Standards and Technology (NIST). One of the most Exact Clocks in the world.

Background: Using python time module for measuring time.

From the unix "date" command, we can get the epoch, which is defined as the number of seconds since 01-Jan-1970 (link):

date +'%s'

That number looks familiar to anyone who has used epoch-values. And now we have python, it tries to do better and adds the fractional seconds to that value:

time.time()

Having such precision was very tempting. It was much more precise than the old-fashioned SYSDATE that I used in most of my legacy-scripts. And since way back in version 9i, Oracle has SYSTIMESTAMP, which is potentially accurate to the nanosecond (link to Oracle doc).

Here are three terminal windows to compare the options :

At the top, the terminal window shows the unix/linux-date, it returns whole seconds, with no factional component.

Middle-window: Python pretends micro-second accuracy (and the time-module even has some nanosecond-functions if/when the hardware supports that accuracy).

Lower-terminal, SQL>: Oracle _can_ do fractions of a second up to 9 decimals with the FF9 format-mask, but it is actually more like 6 decimals, e.g. microseconds. Still not bad.

So with SYSTIMESTAMP, the basis is there to build a function that produces the same kind of number as python: a floating point number of epoch-seconds, with decimals that go down to milli-, micro-, or even nanoseconds.

The function: use SYSTIMESTAMP to produce an epoch number.

My first attempt was relatively simple and explicit: Calculate the large nr of seconds up to today-midnight, then add the (precise) number of seconds (and decimals) that have passed since midnight. Call that version one, f_epoch1

Note that I use a two-step process, because TO_CHAR does not have a format-maks that covers "epoch seconds" (not yet?).

First I calculate the nr of Seconds from Epoch up until midnight-today.

And then I use a format mask to determine the number of seconds (with fraction FF9) from midnight up until Now.

Adding the two gets me the Epoch, with fractional seconds, similar to python.

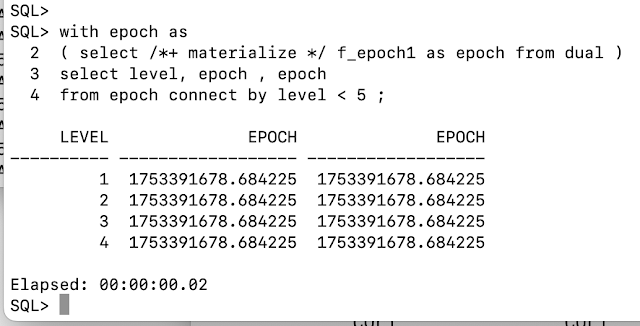

And that seems to come out nicely. Here are the first tests:

Notice the first query: select f_epoch1 from dual. Seems fine, and the value is close to what my linux date +%s command produced.

Now notice the second query, multiple-columns and multiple-rows query: Selecting multiple f_epoch inside one query yields Different, Increasing, values for the epoch. The function is evaluated multiple times, and the order is from left-to-right and from top-to-bottom.

This May be Counter Intuitive, and even Un-Desired !

Remember that SYSDATE and SYSTIMESTAMP are generally Atomic, immutable, during the execution of query.... More on that in a moment.

For the moment we conclude that every column in the query seems to be a separate call to the function, and yields a different (fractional) time

I did a python-call to time.time(), to compare outcomes. You can see from the epoch-values that I took me approx 5 seconds to type the python-commands.

This all Looks Promising: I can get an Epoch-value.

Preferred Usage: in a CTE (With epoch AS...)

Some people will not like the fact that the results of the function varies per row and per column. We are used to SYSDATE and SYSTIMESTAMP being "constant" inside a single query.

To this end, I recommend to use the f_epoch in a CTE expression so as to ensure there is only One call, and the value will be "constant" inside the query that uses it.

Here is how I intend most usage:

Notice how all the epoch-values are now Atomic, identical. And the MATERIALIZE hint is used to ensure the CTE is evaluated Before the main query, and only Once.

If an Atomic Epoch is what you want / need / expect, you should to be Aware of this!

(there is no relation with the atomic clock in the image above, but still...)

Testing: Execute it a few thousand times...

As Oracle-geeks, we are obsessed with "performance", and the first thing I wanted to know: how fast is this. Or: how many CPU-(micro)seconds is this call going to take.

Here are some queries that call this function 1000s of times...

The first query, labelled /* t0 ...*/ , is the 0-measurement, included for calibration purposes. It does not call the function, but should give us an idea of the overhead for a query that returns a number similar to the epoch.

The other queries call the function with one or more columns. Running these queries multiple times should give us an idea of how long a call to the function takes. (I suggest 10+ runs to get rid of the parsing-overhead).

We run the tests 10s of times, and check the CPU- and Elapsed-times in v$sqlarea with the following query:

The zero-query takes 955 microSeconds to return 1000 rows. That is roughly one microsecond per number-returned. Consider this as "the overhead".

The query with 1 single epoch-column takes 20,979 microseconds to return 1000 epoch-values, roughly 20 microsec per epoch-call. This is an upper-limit for the time we should find for a call the function.

The query with 4 epoch-columns takes 67,479 microSeconds, e.g. some additional 46,000 microseconds more to return 3,000 additional epoch-values. That would put the call to the epoch-function at between 15 and 16 microseconds.

The other values confirm this: the average call to the epoch-function is in the order of 15 microseconds.

Not Bad for Oracle running in a container on an old macbook-pro. But I had to try a few more things to try though: Reduce the code, and use Pragma-UDF

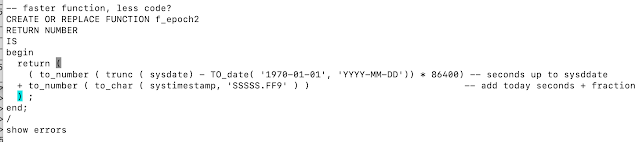

Improving: reduce the code.

First, as an old-hand-coder, I tried to reduce the number of statements in the function. I eliminated the assignment to variables and the sum of two values. With some shuffling, I could get the whole function to be one single return-statement. Call that f_epoch2:

Compared to the original, I have eliminated three variables in the declare-section, and the equivalent of 2 lines of code in the function-body.

After verification (the results still came out correct...), I could test with that new version and it was slightly faster.

Small Victory.

But there was more to try.

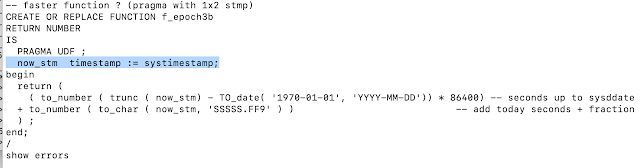

Improving: PRAGMA-UDF

When you RTFM on the use of stored-functions the standard tip is to use PRAGMA UDF (link). This should tell the compiler that you want to use the fucntion in SQL-statements and the compiler will try to optimize for that.

Hence I tried this. The same, reduced code as version 2 but with added PRAGMA UDF, and called it f_epoch3 :

And while I was looking at the code, I tried a few more things, especially around the placement of SYSDATE and SYSTIMESTAMP. I figured the less calls the better, so I came up with this:

The function now does only 1-single fetch of the SYSTIMESTAMP, and no more calls to SYSDATE. On first test, this also seemed to work well.

I now had done my compulsory "tuning + improvement" activity.

Time to Race: which version is fastest...

Every function got called in two queries and each query returned 1000 rows.

One query would return one single column (e.g. 1000 calls to the function) and the other would return 4 columns (e.g. 4 calls to the function). Here are the results..

The numbers are never exactly the same on every test, but the results (on the same machine) are always around 5-10% similar. And the order of the versions is Always the same: version 3b is the Clear Winner.

If we compare the queries t3b.1 and t3b.4, we can roughly estimate the time consumed for a call to f_epoch3b:

Every query returns 1000 rows, with either 1 call or 4 calls to the function. The difference between the queries is thus 3000 calls.

The difference in time per execute is 34,431 - 10,913 = 23,518 microseconds.

That would put the time for 1 call to the epoch-fuction at about 23.5 / 3 = approx 7.9 microsec. An improvement over the original version.

Verdict so far: 8 microSeconds to return an Epoch similar to the python time-call.

(with supposedly micro-second accuracy, -smiley-, but not really...)

Wrap up: Got it working, and got some lessons.

I got the function to do what I wanted: produce epoch-values with a suggested precision, apparently up to 6 decimals, e.g. microseconds (but not really that accurate...)

And from the various versions, I picked f_epoch3b as the winner. The seemingly fastest of the variants, and I used that code to create a final f_epoch.

You should be aware of the (non) Atomicity of the function. And if you need an atomic epoch value in a query: use the CTE and Materialize it.

The measurements, for what they are worth, show that on this system (an old MBP from 2013...), the call to the function takes about 8 microSec.

Tweaking the code to reduce statements made it somewhat faster.

Adding Pragma-UDF Definitely Helped.

As a by-product, I re-used some code (ideas) to measure SQL-queries by picking data from the shared_pool.

The code for the various functions, should you want it, can be found in the links below.

I did also try to pack the code into an SQL-Macro as well (Macros are possible as from Oracle version 21c). That works, and it is Even Faster. But that had a side effects. Topic for another blog. Maybe Later.

-- -- -- -- -- End of this Blogpost, for now -- -- -- -- --

Appendix A: The Scripts.

Feel free to copy and re-use. But dont hesitate to code your own. People tend to re-use code more if they have typed it themselves...

mk_epoch.sql : Creating the functions. File contains all old test-versions.

tst_epoch1.sql : Some queries to measure time.

tst_epoch2.sql : Comparing v1, v2 and v3, with v3b as winner...

-- -- -- -- -- End of this Blogpost, for Real -- -- -- -- --

No comments:

Post a Comment