TL;DR: Searching for RoundTrips to eliminate between python and Oracle. In this case, we "collect" the individual inserts to do them in a few large "batches". The result was Surprising, in a Good Way.

And then... Some pieces of program I would rather not have to write (re-constructing the wheel...). But Hey, it wasnt too hard, and it Really Helped.

Old Lesson (re)Learned: Row-by-Row == Slow-by-Slow.

Image: Several Cites have an efficient metro-train running next to an ever-jammed highway. I remember notably Chicago. But the view from the train from AMS airport to Utrecht is often very similar.

Background: Processing data (outside of the RDBMS), and TAPIs

Some systems want their data "processed" by python or other tools that are not part of the Oracle RDBMS (yet). Even if I think that taking data to and from the Database is generally not #SmartDB (link to asktom), sometimes this needs to be done.

But when every individual record (ins/up) becomes a RoundTrip, you will notice.

Luckily, a lot of our tables already have rudimentary TAPIs (TAPI = Table Application Program Interface). And some of these TAPI-functions caused a lot of those infamous Round-Trips.

TAPI - a Good Concept - until it is not...

The concept might be rather old (80s, 90s?), but it still serves. You'll find similar concepts in ORM-frameworks like JOOQ and Hibernate (links)

In our case, our python code will generally handle the creation (insert/update) of a record in a separate function (f_ins_item... ). These TAPIs will typically handle things like: 1) ensure there is a parent-object, or maybe create one, 2) and handle MERGE-functionality when required to prevent insertion of duplicates. 3) verify (or create) the necessary metadata or lookup-data.

This is a Good Idea, as it centralises the logic for tables in a few, easy to find, functions.

Most of these TAPI functions do their Good Work quietly in the background. In our cases, the "create/update of a record" is not the most time-consuming activity of a program, but rather the outcome of a much longer process. Not a noticeable bottleneck. Mostly.

But all of these TAPIs are single-record functions: they act on 1 record at a time. And when processing large numbers of records, that TAPI-function and the round trip(s) it does can become a time-consuming activity.

And "network" is a funny resource-consumer: you end up with both Application and Database doing seemingly "nothing" until you know where to look (In our case: AWR and application-logs-to-stdout, but this story is not about the "diagnose" it is about the "Fix").

TAPIs causing round-trips - Too Many RoundTrips.

As it was, the most executed statements on a particular system were the Inserts of "Details" in the datamodel: Records at the fringes of the ERD-diagrams that would receive 100s or even 1000s of records as+when details become known about an item (e.g. the "generic datamodel strikes again", different topic...).

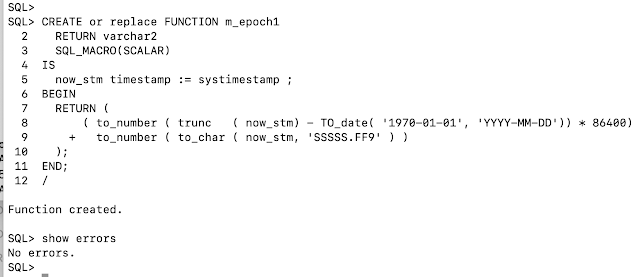

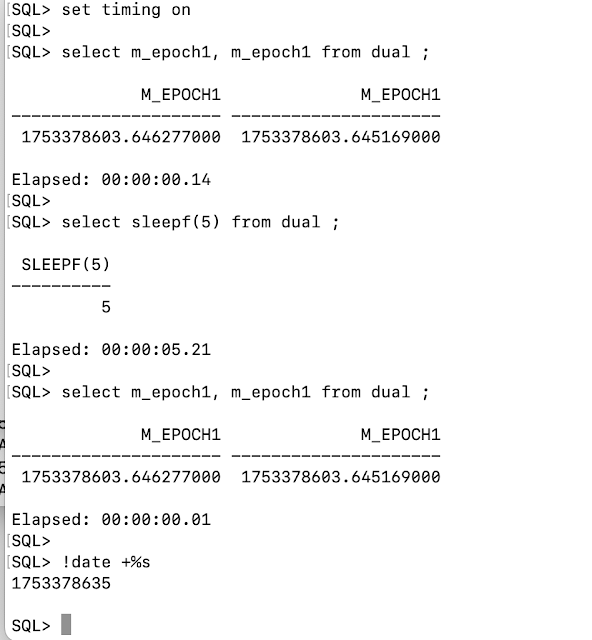

The nature of those TAPIs is 1-record-at-a-time. And sometimes that hurts. From application-log (lines with epoch-time printed to stdout) we could learn that the insert-function was often called, and was a time-consumer. The RDBMS had some notable INSERTS as "High Frequency" (top of list for "ordered by executions"), but not as notable inefficient or resource-consuming statements.

The whole picture of a slow-program, a relatively quiet RDBMS, and the AWR-numbers about executions and RoundTrips, was enough to warrant a little test.

What if we could "batch" those inserts and prove that 1-row-at-a-time was really the in-efficient part of the system ?

Test: Catching the insert of a record...

For test-purposes, I simplified the table like this (will put link to script below):

The ID and CREATED_DT get generated by the RDBMS on insert. The FKs are self-explanatory. The Real-World case is a record of some 20 columns with a few more constraints (think: optional columns for dates, times, intervals, validity, valid lat/long, various field- and record-level constraints that can take some DB-CPU to validate, but never much....). And the (average)size for records varies between 500bytes and 2000bytes, depending on the item, the source and the label.

The Original insert looked (simplified) like this:

We have a function that can be called whenever a "label" is detected for an Item. The properties of the label need to be stored, with a few FK-references and various detail-properties that go in the columns or (free-format-ish) fields of the record.

Needless to say the FKs must exist for this to work. Cases where the FK-parents may have to be inserted are more complicated. And checking of various "validations" for columns can also take code + time. For the sake of demo, this is a much-simplified example.

Notice this SQL-statement is watermarked as /* t1 ...*/ for easy spotting in tests. Watermarking can also be Very Useful in deployment. Just saying.

Also notice: this function does Not Commit. The TX-logic is done elsewhere.

In short, several INSERT statement of this nature were The Most Executed stmnts from our problem-programs...

Buffering in a list, the Simple Concept.

The fact that all(?) insert in the original version go via a single function is a Great Start. All we have to do is "catch" the inserts, collect a sufficient number of them, and then send those to the RDBMS as a single statement using something like cursor.executemany (see this good example in the docu..)

In pseudo code:

That pseudo-code speaks for itself: store new records in a list (of records), and insert them when you have a significant collection. The Inspiration came partly from what I knew about RoundTrips and previous programming effort. And from a Very Readable example that can be found in the python-oracledb doc on "Batch Execution" (link).

Two main things to check in this concept: 1) Do Not Forget to check and insert any leftover items in the list before program commits or exits. 2) Avoid errors with an Empty-list, e.g. when no records at all are in the list, stop the function from throwing an error.

Other than that: Piece of Cake, right ?

Note: Python-adepts may recognise this as "using dataframes". Very Similar. Except that at this point, I use self-coded lists for simplicity and demo. It is quite possible that our dev-team will, over time, adopt some form of data-frames (Pandas, Apache-PySpark) in future. You can lead a horse to water.... but Maybe Later.

Late-Edit: As I am writing this blog, Christopher Jones is writing about DataFrame support in the latest python-oracledb release. Check this!

Let's put it to Code:

Note: the complete, hopefully runnable, program and scripts are linked at the bottom of the blog...

We start by defining some necessary (global-)variables and constants:

The implementation will need a (global-) list to add records: itl_list

It needs a length at which to do the inserts and re-initialize the list: itl_list_max_len

And we have the SQL to do the work: itl_list_sql_ins

The SQL-statement does not have to be defined global, but putting it here cleans up the def-function code. In practice, having the SQL inside or near the function can help with coding and code-reading. You choose whatever is convenient for you.

With this in place, we can re-write the insert-functions, in two parts: First the function to catch the records:

This "insert" function no longer interacts with the RDBMS, instead it appends the records to the (global) list.

In practice, there might be additional logic to check the "validity" of the record before adding it to the list. I've left that out in this example for simplicity.

But the add2list Does check for the size of the list. And when itl_list_max_len is reached: it calls the function to insert the records from the list and to reset the list.

The function that does the actual insert looks like this:

If there is data in the list: then insert it.

Note that in this example, we do not (yet) check/re-check the validity of the data before handing it to the cursor. Any serious data-anomaly could throw a nasty error.

As return-value the function reports the actual number of rows processed by the cursor, assuming that it was the nr of inserted records.

This code "compiled and ran" and all seemed Well..

So Far So Good. But did it Help ?

Let's Race It....

To compare, I pasted together a program that will do two loops of n_sec. One loop of original, individual inserts. And another loop of n_sec of list-buffered-inserts. Let's see what comes out faster...

Note: On early testing, I started with n_sec = 120sec of inserts. The Array-insert was so fast it threw an error: ORA-01653. Good Start. I adjusted the timings downwards a bit...

So I have two while-loops that each try to insert "records" as fast as they can for _only_ 10 seconds.

The first loop does 10 seconds of "individual inserts", it uses the original call for each individual record. The results to stdout looked like this:

Notice the number of loops (records): 2109, or 210.9 records per sec. Then notice: 2113 RoundTrips (minus the 4 from program-startup). Yep, 1 RT per record. Reporting the stats and the commit will add 2 more RTs, and bring the total to 2115 RTs before the next test starts.

(Also notice, I sneakily eliminated hard-parsing to beautify my results a little... )

The second loop does 10 seconds of append-to-list, with the occasional insert-into-table when the list gets to 1000 records. Stdout report looks like this:

Wow... That 10sec loop inserted 339.339 records....? A factor of 150x more. No wonder the first test hit my tablespace-size-limit.

First verification: count (*) in the database, Yep: over 600.000 records (there was a 1st run to eliminate the overhead of hard-parses...). Looks correct.

Second verification : the nr of RoundTrips. Those 339.339 new records, at 1000 records per execute, would have caused 340 RTs.. The reported nr of RTs is 2455. And minus the previous 2115 RTs, that is ... 340 RTs. That seems to concur Very Well.

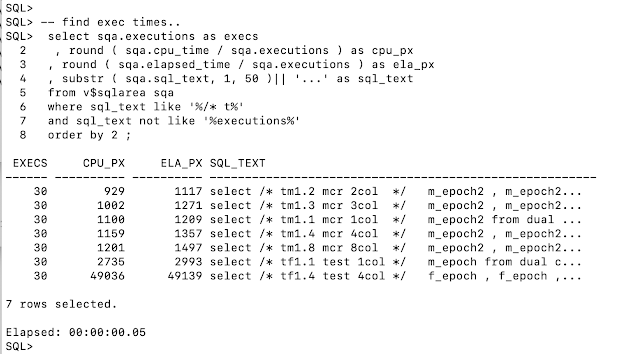

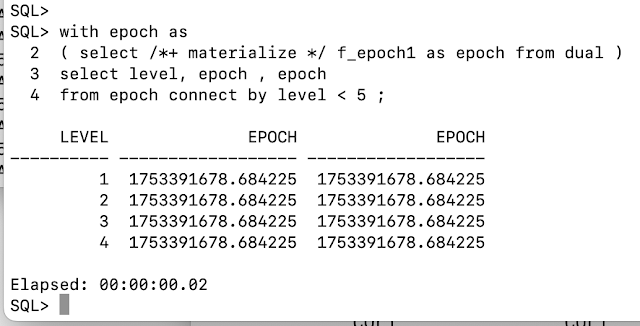

Final check: V$SQLAREA (and counts) after two(!) runs of the program:

The Statements show up in the Shared_pool, and look at those numbers:

The individual-inserts /* t1 indiv */ have consumed about 273 microseconds per execute, for 1 row per execute, or 273 microsecond of DB-time Per Row.

The list-insert, marked /* t2 list */, with 1000 rows per execute, has consumed 12,854 microseconds per execute, but with 1000 rows per execute, that is Only about 13 microseconds of precious DB-time consumed per Row.

This Thing Rocks!

Some sobering thoughts...

This is essentially an Old Lesson (re)Learned: Row-by-Row = Slow-by-Slow. We Knew this since, ah.. 1995.

The First Thing we missed with the new function was the RETURNING-ID. All of our TAPI functions so far return the ID (primary key) of newly inserted or merged record. In the case of bulk-detail-records that is not a problem. But for inserting new meta-data, new lookup-data or otherwise data that is needed for further processing, this can be an obstacle. In our case, we will only build list-inserts for records where we do not need that return-value. Minor problem so far...

Validation of records by the RDBMS, e.g. Constraints inside the Database, can be more complicated on bulk-inserts. Bulk-error processing is possible, but not always simple. With individual records, it is easier to catch errors with try ... except blocks. In our case, there are records where we dont want bulk (yet) for this reason. You decide how important this is to you.

Extra code means additional dependencies and additional (future-)maintenance. Especially difficult to argue when written to "work around a problem" rather than to add something (functionally-)useful to a system. In this case, I had to write two functions to replace the original (TAPI-)insert. And future programmers/users need to take into account that the leftover-data in the list needs 1-more-insert to clear out.

For this example: Someone will forget to purge the array at some point, and (inexplicably) loose the contents of the last batch...

I would recommend to only apply this trick when you Know it is going to make a Big Difference, and when your team is capable of understanding the additional (brain-)work.

Alternatively, you can search for existing solutions. Certain DataFrames for python may already solve this problem for you. The pandas-dataframe (link) looked promising, but on first-search it did not provide exactly what we were looking for.

Further items to explore..

Record-types. The equivalent of what PL/SQL has: EMP%TYPE could help in defining a structure to hold data. It could make the lists easier to manage and it can do some data-checks before adding data to the list. It would reduce potential for errors on the actual insert. Maybe Later.

Data-Frames or similar toolkits might "do the work for us". For java there are JOOQ and Hibernate. Python has several DataFrame options, such as Pandas and Apache-pySpark and some of those may have potential. Maybe Later.

Geeky: How big can the array be (in nr-records and/or in memory-footprint) before it shows signs of deterioration? For the moment, any number above, say, 100 will clearly benefit the system by reducing RoundTrips and overhead. But is there is an optimun or some upper-limit. Maybe Later.

Summary: Batch-Processing (array-processing) Works !

From this (over-simplified) testrun, I want to point out the Two Main Benefits of Array-Processing:

1. The program managed to insert 150x more records in the same 10sec interval. That is a clear increase in capacity for the app-program. The biggest benefit is in reducing the overhead, the call- and roundtrip-time per record.

2. The consumption of DB-resources on the RDBMS-side is much more efficient as well. Because the RDBMS can now handle "bigger chunks" per call, it spends less time on the same amount of "ingest data". This Benefits the RDBMS as well.

The numbers on this test Really Surprised me. Again. Despite using systems with only single-digit-millisec latency. And I knew RTs were costly, I've seen + fixed this kind of problem before. But I didnt expect the difference would be This Big.

This problem is as old as the app-rdbms dichotomy. And yet we dont seem to learn.

This test in particular also illustrates how un-necessary RoundTrips can slow down Both the App and the RDBMS: not just by losing time waiting for latency, but also from the incurred additional processing.

RoundTrips are the Next Important Challenge in IT...

This test demonstrated: Both components, the Application and the RDBMS, Gain from batch- or array-processing and reduced RoundTrips.

-- -- -- -- -- End of this blogpost, for Now -- -- -- -- --

Appendix 1: links to Scripts.

tst_ins_labels.sql : set up the datamodel.

tst_ins_label.py : the demo program with two while loops

You need following "importable files" to run the tst_ins_label.py program:

ora_login.py : the login utility (uses dotenv) and the session-info.

prefix.py : contains pp ( *argv ) to print to stdout with timing info.

duration.py : my stopwatch, time-measurement for python programs.

.env : used by dotenv to store + get credentials and other info.

And to verify the results, you can use:

tst_2arr.sql : view SQL in shared_pool, and count records in src_item_label.

Feel free to copy or even re-type.

Re-use of code is often a myth, unless you have typed or at least modified the code yourself.

-- -- -- -- -- End of this blogpost, for Real -- -- -- -- --